Creating my own Web Research Agent

ChatGPT Web Search

ChatGPT’s web search agent has become one of my favorite AI tools, practically replacing Google for me. It returns ad-free results and digs up in-depth information in a fraction of the time manual research would take. It just feels better.

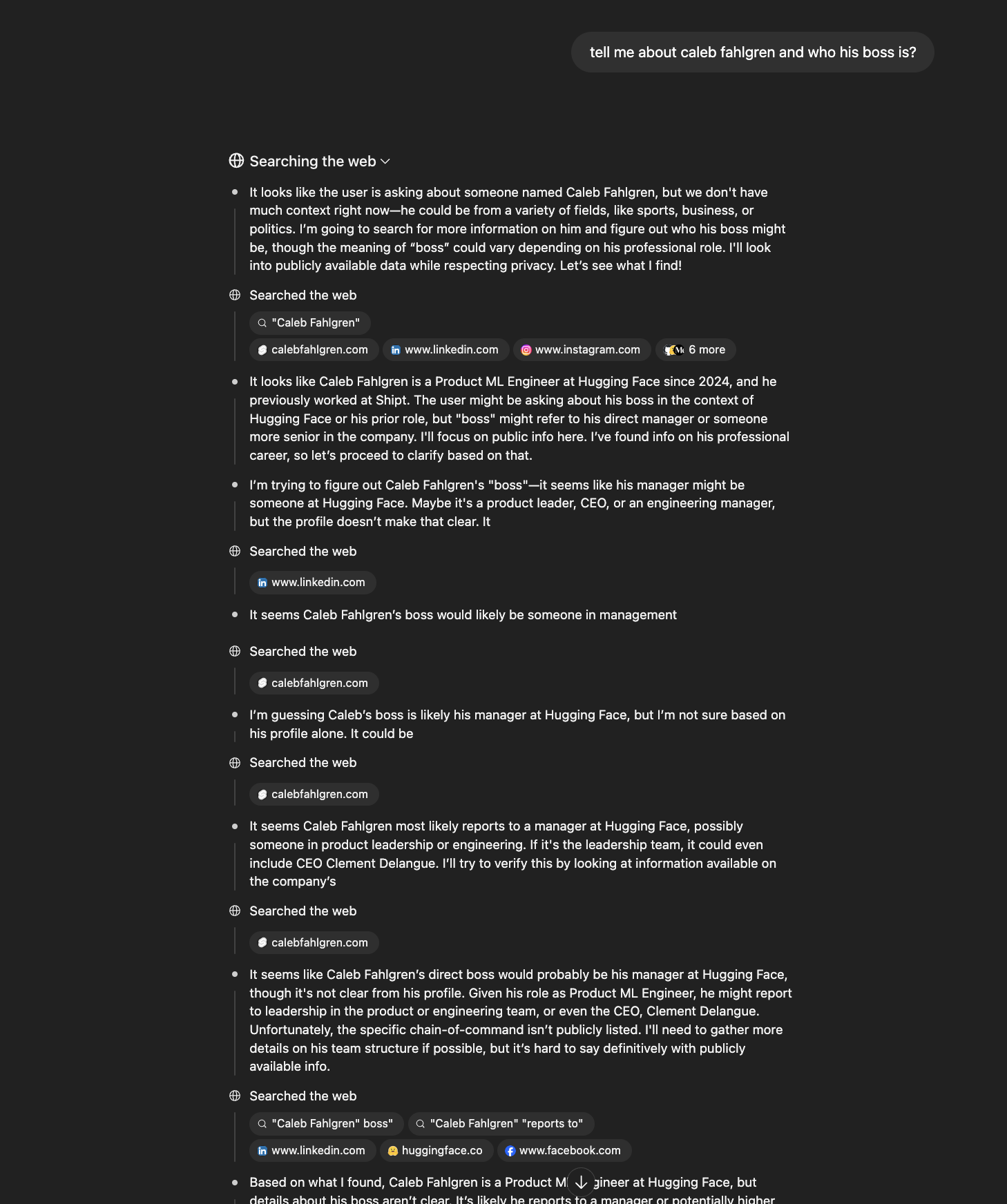

Here’s what a typical search looks like:

There is so much going on in this screenshot.

The model reasons about the question, works out what information it is still missing, then runs web searches to fill those gaps. This is very similar to how a human researches a topic.

What is an Agent?

Let’s take a step back. What is an agent?

Here’s the definition from Hugging Face:

The LLM chooses when to continue or stop, and which tools to use, controlling the program flow.

Agents typically have access to tools: self-contained functions that let the model interact with the outside world.

Understanding Tools

A language model can read and generate text, but it can’t act in the real world unless you give it tools. Tools add concrete abilities:

- Retrieve information – query Google, pull data from a database, open a file

- Perform actions – send an email, save a document, run a calculation

- Integrate services – fetch the weather, get stock prices, translate text

The model decides which tool to use and when, like choosing the right item from a toolbox.

Example: asked “What’s the weather in Tokyo right now?”, the model:

- Realizes it lacks current weather data

- Chooses the weather-service tool

- Calls the tool and fetches the forecast

- Returns the answer

In traditional code you’d spell out every step. A tool-enabled model figures out the sequence on its own.
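To make the weather example concrete, here is a minimal Python sketch of the part your own code still owns: executing whichever tool the model picks. It assumes the model’s choice arrives as a tool name plus a JSON string of arguments, which is how OpenAI-style tool calls are shaped. `get_weather`, its placeholder reply, `TOOL_FUNCTIONS`, and `dispatch` are hypothetical names for illustration.

```python
import json
from typing import Callable


def get_weather(city: str) -> str:
    """Hypothetical tool; a real one would call a weather service."""
    return f"Weather data for {city} (placeholder)"


# Maps the tool names the model sees to ordinary Python functions.
TOOL_FUNCTIONS: dict[str, Callable[..., str]] = {"get_weather": get_weather}


def dispatch(name: str, arguments_json: str) -> str:
    """Execute the tool the model chose and return its output as text."""
    if name not in TOOL_FUNCTIONS:
        return f"Error: unknown tool '{name}'"
    try:
        args = json.loads(arguments_json)
        return TOOL_FUNCTIONS[name](**args)
    except (json.JSONDecodeError, TypeError) as exc:
        # Malformed JSON or wrong argument names: report it rather than crash.
        return f"Error: bad arguments for '{name}' ({exc})"


# The model decided it needs live data and asked for this call:
print(dispatch("get_weather", '{"city": "Tokyo"}'))
```

Returning errors as strings is one common design choice: the model sees the message on its next turn and can retry with corrected arguments instead of the whole program stopping.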

Tools for the Research Agent

For our web search agent, we only need two:

- web_search(query) – returns a list of result snippets and URLs

- web_browse(url) – fetches a page and extracts its raw text

For web_search, we can use the Brave Search API, which powers web search for many AI companies, including Mistral, Together, and Anthropic. For web_browse, we can use Jina AI’s Reader API.
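Here is a minimal sketch of both tools using only Python’s standard library. It assumes Brave’s web search endpoint (`/res/v1/web/search`, authenticated with an `X-Subscription-Token` header, results under `web.results`) and Jina Reader’s convention of prefixing the target URL with `https://r.jina.ai/`. The environment variable names `BRAVE_API_KEY` and `JINA_API_KEY` and the helper names are my own choices. Request building and response parsing are kept apart from the network call so they can be checked offline.

```python
import json
import os
import urllib.parse
import urllib.request
from typing import Optional

BRAVE_ENDPOINT = "https://api.search.brave.com/res/v1/web/search"
JINA_READER_PREFIX = "https://r.jina.ai/"


def build_search_request(query: str, api_key: str, count: int = 5) -> urllib.request.Request:
    """Build a GET request for Brave's web search endpoint."""
    params = urllib.parse.urlencode({"q": query, "count": count})
    return urllib.request.Request(
        f"{BRAVE_ENDPOINT}?{params}",
        headers={"Accept": "application/json", "X-Subscription-Token": api_key},
    )


def parse_search_response(payload: dict) -> list[dict]:
    """Reduce Brave's JSON to the title, URL, and snippet of each web result."""
    results = payload.get("web", {}).get("results", [])
    return [
        {"title": r.get("title", ""), "url": r.get("url", ""), "snippet": r.get("description", "")}
        for r in results
    ]


def build_browse_request(url: str, api_key: Optional[str] = None) -> urllib.request.Request:
    """Jina Reader takes the target page URL appended to its own prefix."""
    headers = {"Authorization": f"Bearer {api_key}"} if api_key else {}
    return urllib.request.Request(JINA_READER_PREFIX + url, headers=headers)


def web_search(query: str) -> list[dict]:
    """Tool: search the web (assumes BRAVE_API_KEY is set)."""
    req = build_search_request(query, os.environ["BRAVE_API_KEY"])
    with urllib.request.urlopen(req, timeout=15) as resp:
        return parse_search_response(json.load(resp))


def web_browse(url: str) -> str:
    """Tool: fetch a page as text; JINA_API_KEY is optional (assumed to raise rate limits)."""
    req = build_browse_request(url, os.environ.get("JINA_API_KEY"))
    with urllib.request.urlopen(req, timeout=30) as resp:
        return resp.read().decode("utf-8", errors="replace")
```

Trimming each search result down to title, URL, and snippet keeps the text fed back to the model short, which leaves more of the context window for the pages it decides to read.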

What does a tool definition look like?

Each tool has to be described to the LLM in a structured format. We follow OpenAI’s function-calling format: the tool’s name, a description, and a JSON Schema for its parameters that tells the model exactly which inputs it can pass and which are required.

```json
{
  "name": "web_search",
  "description": "Search the web for current information and web results.",
  "parameters": {
    "type": "object",
    "properties": {
      "query": {
        "type": "string",
        "description": "The search query to execute"
      }
    },
    "required": ["query"]
  }
}
```
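When calling OpenAI’s Chat Completions API, a definition like the one above is wrapped in a `{"type": "function", "function": ...}` envelope and passed in the `tools` list. The sketch below builds both tool definitions that way. The `web_browse` description and parameter wording are my own, and `function_tool` and `missing_required` are hypothetical helpers; the latter shows one way to reject a model-generated call that leaves out a required argument.

```python
import json


def function_tool(name: str, description: str, properties: dict, required: list[str]) -> dict:
    """Wrap a function definition in the Chat Completions `tools` envelope."""
    return {
        "type": "function",
        "function": {
            "name": name,
            "description": description,
            "parameters": {"type": "object", "properties": properties, "required": required},
        },
    }


TOOL_SCHEMAS = [
    function_tool(
        "web_search",
        "Search the web for current information and web results.",
        {"query": {"type": "string", "description": "The search query to execute"}},
        ["query"],
    ),
    function_tool(
        "web_browse",  # description wording below is illustrative
        "Fetch a web page by URL and return its text content.",
        {"url": {"type": "string", "description": "The full URL of the page to read"}},
        ["url"],
    ),
]


def missing_required(tool: dict, arguments_json: str) -> list[str]:
    """List required parameters that a model-generated call left out."""
    args = json.loads(arguments_json)
    required = tool["function"]["parameters"].get("required", [])
    return [param for param in required if param not in args]


# Usage (OpenAI Python SDK): client.chat.completions.create(model=..., messages=..., tools=TOOL_SCHEMAS)
```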

Pricing breakdown

| API Service | Free Tier | Paid Tier | Pricing Model |
|---|---|---|---|
| Brave Search API | 2,000 queries/month | Base: $3 per 1K queries | Cost per 1,000 queries |
| Jina Reader API | Unlimited (no API key) | 10M free tokens | Token-based (~$0.02 per 1K tokens) |
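To see how those rates add up, here is a back-of-the-envelope estimator. The default rates are simply the figures from the table (pricing changes, so treat them as placeholders and check each provider’s pricing page), the session size in the usage line is made up for illustration, and the estimate leaves out the LLM’s own token costs. `Rates` and `estimate_cost` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rates:
    """USD prices; defaults copied from the table above, so check current pricing pages."""
    brave_per_1k_queries: float = 3.0
    jina_per_1k_tokens: float = 0.02


def estimate_cost(searches: int, pages: int, tokens_per_page: int, rates: Rates = Rates()) -> dict:
    """Paid-tier spend for one research session; free tiers are ignored."""
    brave = searches / 1000 * rates.brave_per_1k_queries
    jina = pages * tokens_per_page / 1000 * rates.jina_per_1k_tokens
    return {"brave": brave, "jina": jina, "total": brave + jina}


# Hypothetical session: 10 searches plus 5 page reads of ~8,000 tokens each.
cost = estimate_cost(searches=10, pages=5, tokens_per_page=8_000)
print({service: round(usd, 4) for service, usd in cost.items()})
```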

Agent Flow

When you ask “What are the latest developments in AI reasoning models?”, the agent searches the web, analyzes the results, visits promising links to read the full articles, and then synthesizes everything into a single answer.
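This flow is the loop from the Hugging Face definition: the model, not our code, decides when to stop. Below is a minimal Python sketch of it, assuming OpenAI-style chat messages (each tool call carries an `id`, a function `name`, and a JSON string of `arguments`, and results go back as `role: "tool"` messages). `run_agent` and `call_model` are hypothetical names; `call_model` stands in for a wrapper around the real chat API, and `max_steps` is a safety cap I added so a confused model can’t loop forever.

```python
import json
from typing import Callable

Message = dict
# Takes the conversation so far, returns one assistant message as a dict.
ModelFn = Callable[[list[Message]], Message]


def run_agent(question: str, call_model: ModelFn,
              tools: dict[str, Callable[..., object]],
              system_prompt: str, max_steps: int = 10) -> str:
    messages: list[Message] = [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": question},
    ]
    for _ in range(max_steps):
        reply = call_model(messages)
        messages.append(reply)
        tool_calls = reply.get("tool_calls") or []
        if not tool_calls:
            # No tool requested: the model has decided it is done.
            return reply.get("content") or ""
        for call in tool_calls:
            fn = call["function"]
            try:
                result = tools[fn["name"]](**json.loads(fn["arguments"]))
            except Exception as exc:  # surface failures to the model instead of crashing
                result = f"Error: {exc}"
            messages.append({
                "role": "tool",
                "tool_call_id": call["id"],
                "content": result if isinstance(result, str) else json.dumps(result),
            })
    return "Stopped: step limit reached before the model produced an answer."
```

In practice, `call_model` would wrap `client.chat.completions.create(..., tools=...)` and return `choices[0].message` as a dict, with `tools` mapping "web_search" and "web_browse" to the two functions. Injecting it as a parameter also makes the loop easy to test with a scripted fake model.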

For the system prompt, I used a variation of Cursor’s leaked system prompt, which I’ve found gives the model good instructions for tool calling.

Demo

By adding a bit of polish with Ink (React for interactive command-line apps), we can make the terminal experience much more interactive. The result is a robust web research agent powered by gpt-4.1.